The short answer to the title is: Accessibility is not an average.

For a longer answer, read on.

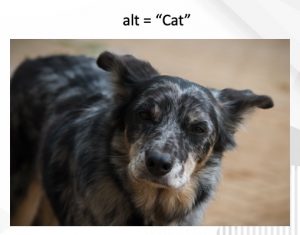

When I run training, a common refrain is: computers don’t know what is appropriate. I illustrate that with a picture of a dog that has an alt text of “cat”.

Historically, accessibility testing tools would not pick that up. The image has alternative text and it isn’t the same as the file name, so as far as an automated test is concerned, that’s OK.
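To make that concrete, here is a minimal sketch of the kind of rule such a tool applies. It is not taken from any real testing tool; the class name `AltTextChecker`, the function `check_images` and the two rules are my own simplification of "has alt text, and it isn't the file name".

```python
from html.parser import HTMLParser
from pathlib import PurePosixPath


class AltTextChecker(HTMLParser):
    """Hypothetical checker: records (src, alt, verdict) for every <img>."""

    def __init__(self):
        super().__init__()
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        alt = attributes.get("alt")
        filename = PurePosixPath(src).name
        if alt is None:
            # No alt attribute at all (a valueless `alt` is treated the same).
            verdict = "fail: missing alt attribute"
        elif alt.strip() and alt.strip().lower() == filename.lower():
            verdict = "fail: alt text is just the file name"
        else:
            # The rules cannot tell whether the text describes the image.
            verdict = "pass"
        self.results.append((src, alt, verdict))


def check_images(html):
    """Run the two naive rules over an HTML string."""
    checker = AltTextChecker()
    checker.feed(html)
    checker.close()
    return checker.results


page = '<img src="dog.jpg" alt="cat"><img src="dog.jpg" alt="dog.jpg"><img src="dog.jpg">'
for src, alt, verdict in check_images(page):
    print(src, repr(alt), verdict)
```

The dog picture with an alt text of “cat” passes, because nothing in these rules looks at what the image actually shows.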

With machine learning (ML) you could, at least in theory, feed images with good alt text into a model and use that to test whether the alt text of “cat” is good. Hopefully it would say no. I haven’t seen that work in practice, but I can accept it is possible.

Now there are tools for producing (or co-producing) code, e.g. Copilot. There are even some that claim to output accessible code.

I won’t claim to be an expert in Artificial Intelligence, ML, or Large Language Models (LLMs), but there is a fairly understandable way to explain why it won’t work for accessibility. The process for generating the code is something like:

- A corpus of information is fed into a model. For example, you could feed it code on the open web, or from code repositories.

- The model is analogous to a statistical model: it looks at which things statistically go together.

- Based on a prompt, the model will output things that are likely answers to that prompt.

There are two reasons such a model would not output accessible code:

- Most code is not accessible.

- Even an average of reasonably accessible code might not work, because the model uses statistics (rather than meaning) to decide between component types.

I don’t think anyone will doubt the first reason. The WebAIM analysis of a million homepages is pretty good evidence that most code is not accessible. Nomensa’s experience of running audits aligns with that.

Wherever you take an average, or a ‘most likely next thing’ based on the average webpage, it will not output accessible code because the average is not accessible.
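A deliberately crude sketch of that "most likely thing" idea, assuming a toy corpus I have made up for illustration. The 7-to-3 split is invented, not a measurement, and a real LLM predicts tokens in context rather than counting whole snippets. The function name `most_likely` is hypothetical.

```python
from collections import Counter

# Hypothetical toy corpus: invented counts, not real-world data.
# Most "buttons" are a clickable div; a minority use a real <button>.
corpus = (
    ['<div class="btn" onclick="save()">Save</div>'] * 7
    + ['<button type="button" onclick="save()">Save</button>'] * 3
)


def most_likely(snippets):
    """Return the most frequent snippet and its share of the corpus."""
    counts = Counter(snippets)
    snippet, count = counts.most_common(1)[0]
    return snippet, count / len(snippets)


snippet, share = most_likely(corpus)
print(f"{share:.0%} of the corpus: {snippet}")
```

With this corpus the winner is the clickable `div`, which is not keyboard operable by default and has no button role. The majority pattern wins whether or not it is accessible.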

I’m less confident of the second reason, but I’m thinking of examples where sites take different approaches to a particular component, and a model might merge them together, creating a very confusing experience. For example, it might include a smattering of ARIA attributes because they are used by some sites, but they are unlikely to be used appropriately.

I’m not sure these are solvable problems. For example, if you took a smaller corpus of known-accessible examples to feed the model, would it be large enough to work? Also, would it be better than using a decision tree and a component library? I’m sceptical.

Accessibility is by definition non-typical usage, therefore applying an average does not work.

For further reading, I’d recommend Adrian’s articles on AI fixing accessibility, and AI generated alt text.

If you haven’t seen it, check out the demo of the Evinced code assistant, which starts around 15:00: https://www.youtube.com/watch?v=gYTbMm_Q4CY

They’ve found a way to understand the context of the developer’s intent and suggest appropriate accessible code. It’s a single use case demo, but it looks promising.