The CSUN ATC conference is one of the largest accessibility-related conferences in the world. (CSUN stands for “California State University, Northridge”; the conference itself is the “Assistive Technology Conference”.)

I’m going to give my impressions of the conference and talks in roughly chronological order, so read on if you are curious or just want links to lots of new accessibility resources.

I last went to the CSUN conference back in 2014 (roundup), so it’s been a while. I have to admit that San Diego was a nicer location, but then we’re here for the people, so a hotel in Anaheim isn’t the end of the world.

Accessibility Guidelines Working Group

On the Monday we met to do a retro (how have we been working, can we improve, etc.), and then discussed how to define “views”. We need a unit of conformance for use in the guidelines and conformance statements, and “web page” is getting increasingly difficult to use for this.

After a long discussion going through the pitfalls of the previous definitions (page and view), we ended up going with UI-context.

Keynote

The keynote speaker was Gabrielle Giffords, who had to re-learn how to talk after a very traumatic injury. It was run as an interview by her speech therapist, who could also fill in some of the medical and therapeutic aspects. It was so popular that I ended up in an overflow room watching a big screen.

Accessibility in the AI ecosystem

Jenny Lay-Flurrie gave a little history, both of accessibility innovations (e.g. toothbrushes originally being invented for people with mobility impairments) and of her own career (starting at MS on Hotmail).

Microsoft’s accessibility efforts use a hub-and-spoke model, where each division is responsible for its own accessibility, supported by a central team. They need to meet compliance requirements (e.g. the EAA deadline is 109 days away), but that is the floor, not the ceiling.

Accessibility training is mandatory, and some of it is publicly available for free. But they like to go beyond that, for example by including options in games for people who are afraid of spiders; she showed a slider that reduces the realism of an in-game spider.

As per the title, there was a long section on how MS is using AI to improve their services. Part of that involved Be My Eyes, which is using AI to answer questions through the Disability Answer Desk: 70% of questions could be answered by AI, and more quickly.

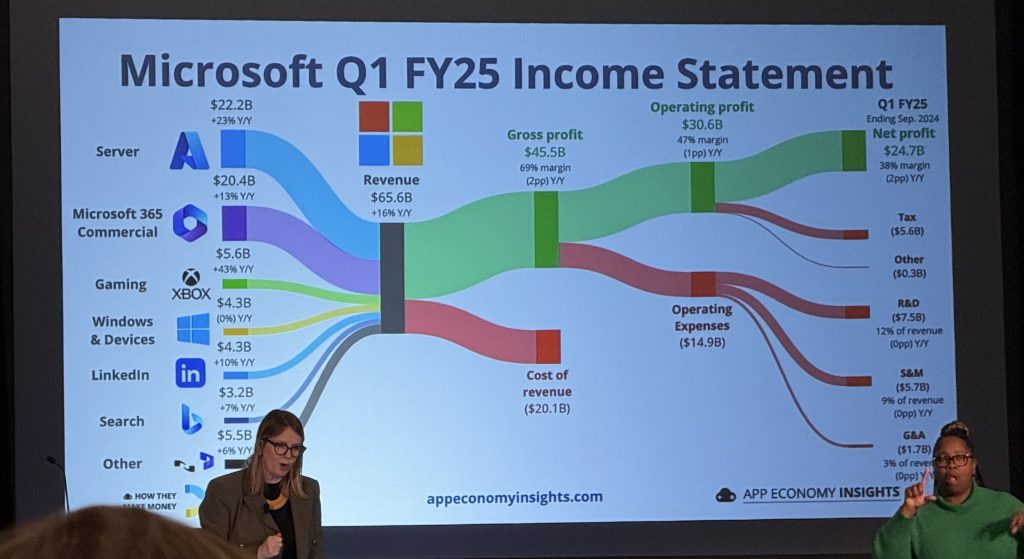

Jenny pulled up a gnarly chart and challenged the audience to run it through an AI assistant to see what it came up with.

The image I fed into ChatGPT:

ChatGPT’s output:

- Total Revenue: $65.6B (+16% YoY)
- Major revenue sources:
  - Server (Azure, etc.): $22.2B (+23% YoY)
  - Microsoft 365 Commercial: $20.4B (+13% YoY)
  - Gaming (Xbox): $5.6B (+43% YoY)
  - Windows & Devices: $4.3B (0% YoY)
  - LinkedIn: $3.2B (+7% YoY)
  - Search (Bing, etc.): $3.2B (+7% YoY)
  - Other: $5.5B (+6% YoY)

Cost & Profit Breakdown:

- Gross Profit: $45.5B (69% margin, +2pp YoY)
- Cost of Revenue: $20.1B
- Operating Expenses: $14.9B
- Operating Profit: $30.6B (47% margin, +1pp YoY)
- Net Profit: $24.7B (38% margin, +2pp YoY)

Expense Breakdown:

- Tax: $5.6B
- Other: $0.3B
- R&D: $7.5B (12% of revenue, flat YoY)
- S&M (Sales & Marketing): $5.7B (9% of revenue, flat YoY)
- G&A (General & Administrative): $1.7B (3% of revenue)

The company achieved solid revenue growth driven by significant increases in Gaming (+43%) and Server (+23%) segments, resulting in strong operating and net profit margins.

That seems good; I couldn’t spot any mistakes or misinterpretations.

Other things are coming too, such as live audio description (in Seeing AI), work with other companies to improve speech recognition for people with speech-impacting disabilities, and a “speak for me” project.

Avoiding the pitfalls of shift-left

Another Microsoft presentation, with three obviously knowledgeable and engaging speakers (Alli Hirt, Erin Lucas, Manika Malhotra). However, I struggled with it a bit. It’s a very wide topic, so it was necessarily quite high level. I already know that you need things like prioritization techniques, but without detail on how to achieve those goals I didn’t get much out of it.

Probably a good one for change-managers (or other managers), less so for practitioners.

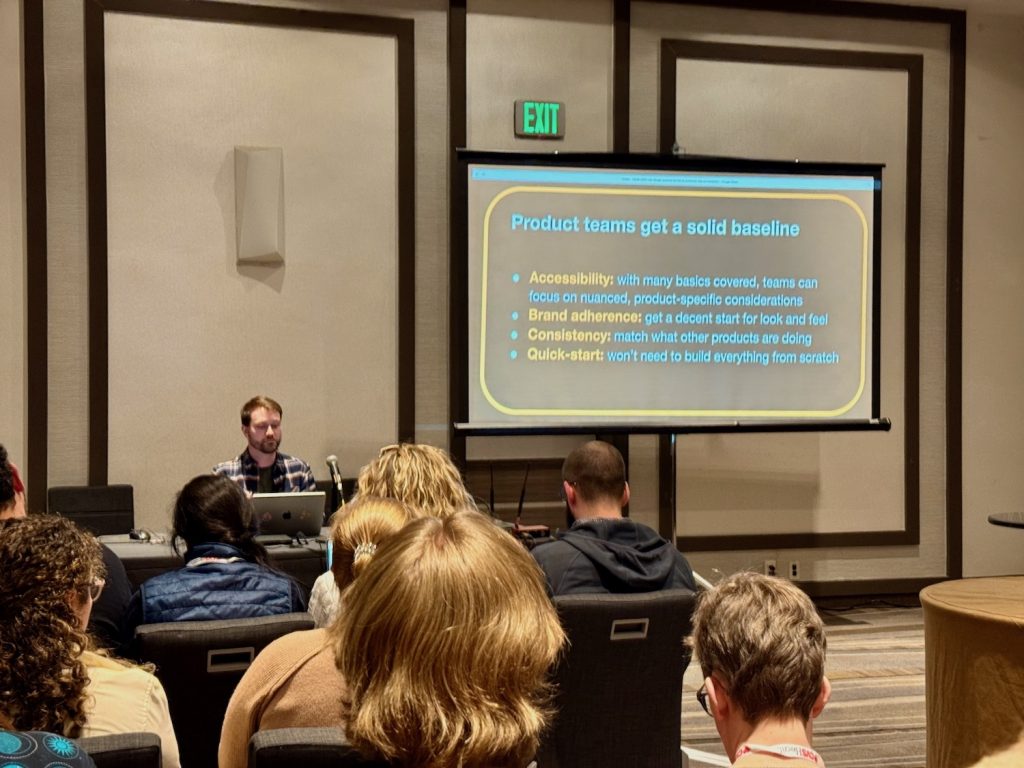

Sustained web accessibility (design systems)

David Cox presented on design systems as a way to improve accessibility, backed by research and testing into whether they have actually made a difference for companies that have one.

It involved a good overview of when design systems should help (e.g. where you have large or multiple teams and have the budget to do it), and what success factors to look for.

It also included research (including a large spreadsheet) on how well some well-known design systems do, and how accessible the corporate homepage is. The correlation was weak: a good design system wasn’t a good predictor of homepage accessibility.

However, there are a lot of confounding variables, such as whether the people creating the homepage even use the design system!

Anecdotally, if we (Nomensa) are testing a UK Government website that uses the GDS components (properly), we either halve the cost or increase the scope compared to a similar site not using the component system. With far fewer issues to find and report, it simply doesn’t take as long, and design-system usage is a strong signal that this will be the case.

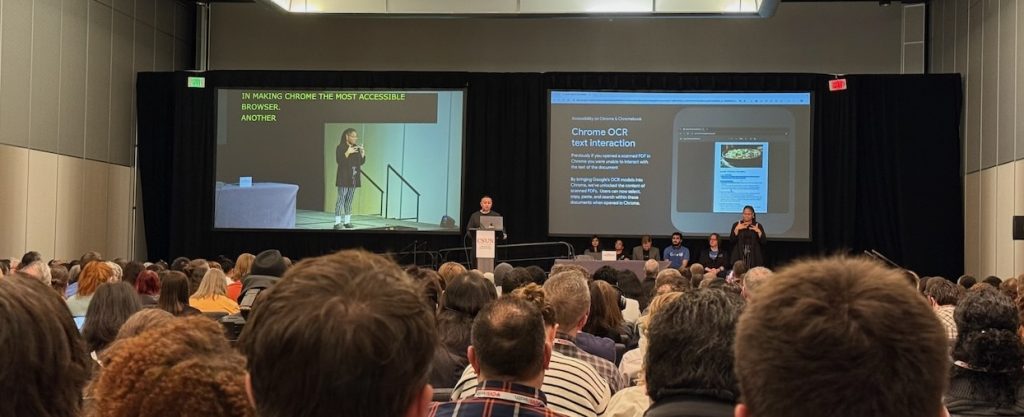

Google keynote

This was a rattle through new and upcoming features of interest:

- Live captions – can now include vocal emphasis and labels for sound effects, and Live Transcribe can work offline.

- Hearing aids – UX improvements such as making the battery info easier to find.

- Android Lookout – reads out details of the real world, improved OCR, and guidance on framing for selfies. You can also go into a mode where you pick something you’re looking for, wave your phone around and it helps you find it.

- TalkBack – added image descriptions with Gemini AI, for any image on your screen. It’s automatic for unlabelled images (on the Pixel 9), or can be activated manually on any phone.

- Contrast adaptions (Android 15) – can make it brighter, outline text, add a solid background, and preserve the colours of links.

- Face control (Chromebooks) – uses face tracking as a pointer mechanism.

- PDFs – scanned PDFs are automatically OCRed. They mentioned something about PDFs previously being rendered as canvas elements, and that is changing. However, I wasn’t clear if that was for all PDFs, or just scanned and OCRed PDFs.

- Read aloud (Chrome browser) – built into Chrome now on Chromebooks, coming to Mac and Windows soon.

- Improved image descriptions (Chrome) – for example, where it previously said “art, graphic”, it now says “art, appears to be bowl of fresh fruits in a bowl”.

- Page zoom (Chrome on Android) – can now set text-size as well as (pinch to) zoom.

- Google Drive – dark mode coming soon.

- Maps – improved walking instructions, and a “lens” icon to show features around you such as ATMs.

- Google Wallet – improved instructions for scanning IDs.

These and more at the Google accessibility resource hub.

Practical research

By Kavana Ramesh, UX researcher at Fable. The premise was that people are intimidated by including people with disabilities in research. It can be new, more complex, and you need to adjust methodology. There are organisational barriers, not just barriers for individuals, but the focus of this talk is the individual barriers.

There was a “build a burger” metaphor, which I didn’t find that helpful, but which injected a little fun. There was lots of good advice on running research with people with disabilities. The only area I might pick up on was a comment around “Make sure you allow the person to be the expert of their AT”, which is true up to a point. However, there is a massive variety of expertise with assistive technology, so I would aim to include non-expert participants as well, and you have to account for participants’ knowledge levels when presenting results.

It’s a small point in an otherwise good presentation.

WCAG 3, the true story!

This was the presentation I helped to give, with Rachael (Co-Chair), Chuck Adams (Co-Chair) and Kevin White (Staff Contact): WCAG 3 overview presentation. It isn’t new content if you’ve been following along at home, but the talk was a chance to provide an overview and open up for questions.

We had quite a few questions, which we answered live, and we published responses as well.

Packaging accessibility (Amazon)

This talk by Shadi Abou-Zahra, Di Wu, Marilyn Hoss, and Chris Baldomero covered Amazon’s push for less paper, less plastic, less friction, and more accessibility.

Having recently been asked to evaluate packaging and hardware myself, I found it interesting that their approach was fairly similar, but they were able to include user research for certain things, such as how to include QR codes. For Blind and Low-Vision folks (BLV), paper instructions may not be at all useful, so getting to digital versions is key. Apparently a 20mm QR code with 8 raised areas (forming a square) was the best way to guide people to the right instructions.

The presentation wrapped up with some useful guidelines for overall packaging and instructions. I don’t know if the presentation will be available, but those guidelines will probably appear (in some form) as part of the EAA roll-out.

When accessibility actively harms

This was essentially an accessibility auditor’s howl of protest at the weird things developers have done when trying to make sites more accessible. The presentation is fairly self-explanatory, if you know what Grange Hill is.

There was nothing new from my point of view (having done or QAed 1000s of audits over 2 decades), but it was very amusing for me and at least three other Brits in the audience.

Digital accessibility legal update

There were a few legally oriented talks at CSUN, but Lainey Feingold’s talk gets the appropriate keynote slot at the start of Thursday.

There’s a good (captioned and signed) video of the legal update. It is US-centric, but even in Europe it’s worth keeping tabs on the US situation as so much software and services come from the US.

My takeaways were that:

- Despite all the problems with the current US administration, quite a lot of accessibility is baked into laws that would take time to remove; executive orders should not be able to affect that.

- There are a lot of state and local laws and regulations that are still in effect.

- A lack of (central) enforcement can have a big effect, as can judges chipping away.

- Some states have pretty high standards in their regulations, and typically companies work to the most stringent state’s level so they can sell everywhere.

Overall, Lainey’s presence and presentation are somewhere between a therapy session and a call to activism, and very welcome in the current climate.

Redefining Design – Accessibility as a Growth Opportunity

Pawel Wodkowski (from Atlassian but not speaking for them) wants to adjust how we talk to or encourage designers. Compliance doesn’t work as a motivator for designers. They want to learn something new, something they haven’t done before, and an opportunity to grow.

It was an interesting presentation. I was already on board with the premise, but he also shared some of the metrics they use internally to establish accessibility adoption across teams.

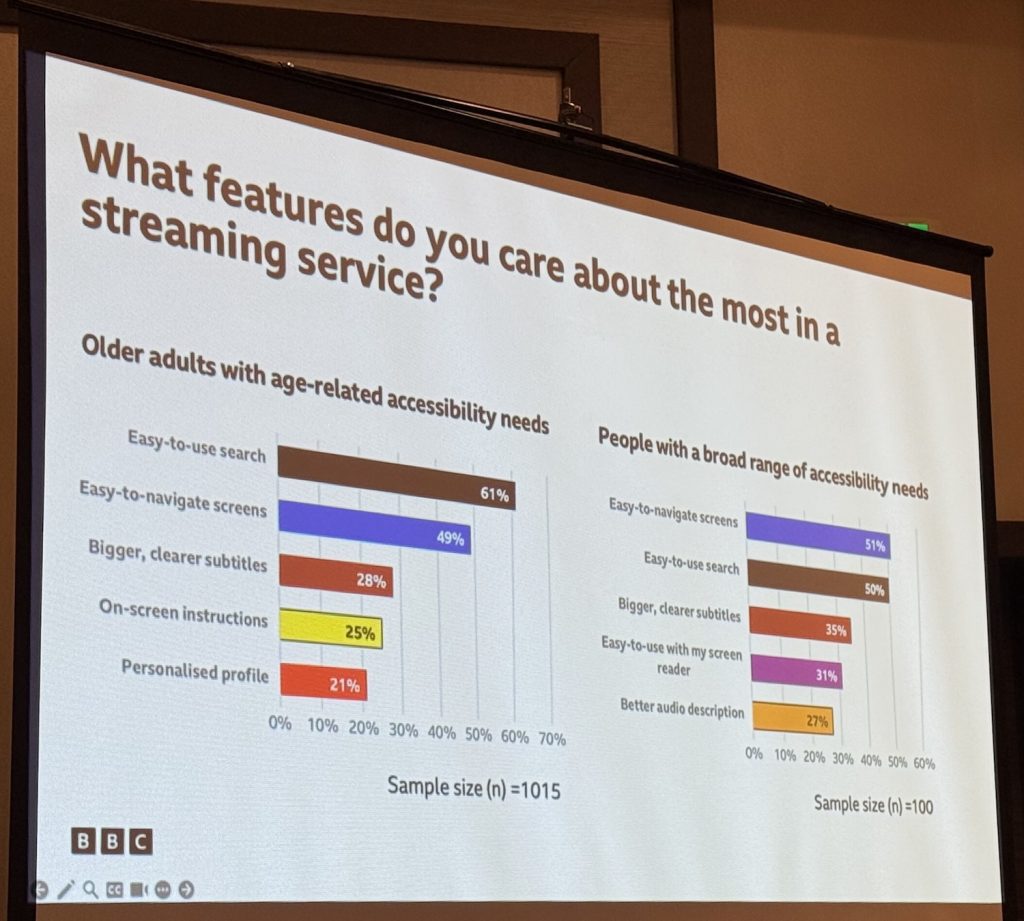

TV Accessibility – BBC

One of my favorite sessions, this was reporting on research, so a meaty topic with lots of detail.

The BBC conducted its first large-scale disability study to explore accessibility in TV applications, focusing on video-on-demand services and HTML5/JavaScript-based apps like iPlayer.

The research used a mixed-methods approach, including surveys, interviews, usability testing, and technical evaluations of how screen readers interact with HTML elements.

The two main groupings shown in the research were older participants (over 1,000), and people recruited specifically for having accessibility needs (over 100).

Content availability is the primary factor in service selection for both sighted and blind/low-vision users. However, people with a broad range of accessibility needs prioritized accessibility features more than older adults did. Device preference also varied, with older participants favoring smart TVs and those with accessibility needs more often using mobile devices and streaming hardware for better accessibility options.

Shared viewing experiences presented unique challenges, as accessibility needs sometimes conflicted. For example, settings often lacked flexibility, preventing simultaneous use of captions and audio descriptions. Users expressed frustration with inconsistent navigation, complex menus, and varying search interfaces across apps. Voice control was another barrier, with some users, especially those with strong accents, finding it unreliable, which reinforces exclusion through design.

Screen reader support was inconsistent, with different models interpreting content differently. Testing across top-rated TVs and streaming devices revealed unpredictable results in announcing headings, lists, and landmarks, making TV navigation harder than on desktops or mobile devices.

In response, the BBC is developing accessibility guidelines tailored to TV interfaces, recognizing that traditional web standards like WCAG do not fully apply to this context. Their approach is built on three principles: understanding the audience, acknowledging TV-specific constraints, and collaborating with industry partners. These guidelines aim to provide practical solutions for developers, ensuring better integration of accessibility features across TV platforms. The BBC intends to publish these guidelines once they are fully developed, helping to create a more inclusive viewing experience for all users.

Testing Mobile Apps: Tools, Techniques and Best Practices

For these types of sessions I tend to just note the best tips I didn’t already know, but the whole Testing Mobile Apps presentation is available. Thank you, John Lilly!

iOS

- You can turn on captions in the VoiceOver settings, which helps with sharing videos

- Full Keyboard Access doesn’t play nicely with VoiceOver; you can use one or the other. Android is OK for this. (I don’t think I’d tried to use them together before!)

- Testing for 200% text size: set it to the third notch from the end. (This seems higher than my setting; I tested this a while back with screenshots and a pixel measure.)

- Testing contrast: using QuickTime Player to display the phone on a desktop can introduce a slight colour shift, which can throw the contrast ratio off by around 0.01. If it’s close, stick with a screenshot.
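To illustrate why a small colour shift matters for borderline results, here is a minimal sketch (my own, not from the talk) of the WCAG 2.x contrast-ratio calculation in Python. The function names are hypothetical. It compares two greys one RGB step apart against white: #767676 sits just above the 4.5:1 AA threshold for normal text, while #777777 falls just below it, so a colour that only barely passes has almost no headroom for capture or mirroring errors.

```python
def _linearize(channel: int) -> float:
    """Convert an 8-bit sRGB channel (0-255) to linear light, per WCAG 2.x."""
    c = channel / 255
    # WCAG 2.x text cites 0.03928 while sRGB uses 0.04045; no 8-bit value
    # falls between the two, so the results are identical either way.
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple[int, int, int]) -> float:
    """Weighted sum of linearized R, G, B channels (WCAG relative luminance)."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(fg: tuple[int, int, int], bg: tuple[int, int, int]) -> float:
    """(lighter + 0.05) / (darker + 0.05), ranging from 1:1 to 21:1."""
    lighter, darker = sorted(
        (relative_luminance(fg), relative_luminance(bg)), reverse=True
    )
    return (lighter + 0.05) / (darker + 0.05)


if __name__ == "__main__":
    white = (255, 255, 255)
    for grey in ((0x76,) * 3, (0x77,) * 3):
        ratio = contrast_ratio(grey, white)
        verdict = "passes" if ratio >= 4.5 else "fails"
        print(f"#{grey[0]:02x}{grey[1]:02x}{grey[2]:02x} on white: "
              f"{ratio:.2f}:1 ({verdict} AA for normal text)")
```

Running it prints roughly 4.54:1 for #767676 and 4.48:1 for #777777, which is why a measurement taken from a slightly colour-shifted mirror is worth double-checking against a screenshot when the result is near the threshold.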

Android

The main tip was to try the (free) Android Accessibility Inspector. It’s like dev tools for Android apps: it shows what TalkBack would announce, and whether an element is native or a WebView.

I asked how you adjust focus styles in iOS and Android. John replied that in Android you’re looking for the “on-focus” modifier, and changing the visual style there. In iOS you can change the global accent colour, but that only changes the default colour.

Wrap up

Overall, CSUN is a great but quite intense conference (for someone at the introverted end of the scale), which, combined with jet lag, made it really tiring. I had just about switched time zones by the day I left.

I’m not a big fan of the Anaheim area; it’s basically a conference center and hotels next to Disneyland, so unless you’re combining it with a Disney trip there isn’t much to see or do locally. However, Paul Adam seemed better at finding places to eat and things to do.

As you can tell from the copious notes above, there were so many good sessions that I can’t really complain! There are also booths from various companies where you can try things out. For example, I had a quick play with the built-in screen reader, magnifier, and laser-eye viewfinder on certain Sony cameras.

